Building Real-Time Applications with Kafka and Spring Boot: A Beginner’s Guide

Introduction

In today’s fast-paced world, data streaming and real-time processing have become essential for modern applications, particularly for businesses that need to respond to customer actions as they happen. This is where Apache Kafka comes into play. Originally developed at LinkedIn and now an open-source project of the Apache Software Foundation, Kafka is a distributed event-streaming platform designed to handle large volumes of data with high reliability and low latency. Spring Boot, through the Spring for Apache Kafka project, makes it easier still to build robust, scalable, real-time applications on top of Kafka.

In this guide, we’ll cover:

- Key concepts in Kafka

- Setting up Kafka with Spring Boot

- A hands-on example: Creating producers and consumers

- Real-world use cases and job market insights

Why Kafka? And Why Now?

For developers, understanding Kafka is valuable for several reasons:

- Job Demand: Data streaming skills are in high demand. Many companies are adopting Kafka to handle real-time data pipelines. Learning Kafka could open doors to opportunities in backend engineering, big data, and DevOps.

- Scalability: Kafka is built for high data volumes. It scales horizontally: you add brokers to the cluster and partitions to a topic, which makes it suitable for applications that need high throughput.

- Decoupling Services: Kafka acts as an intermediary between services. Producers publish events without knowing which services will consume them, so microservices can communicate asynchronously.

In short, mastering Kafka with Spring Boot is a significant asset for any developer looking to expand their skills.

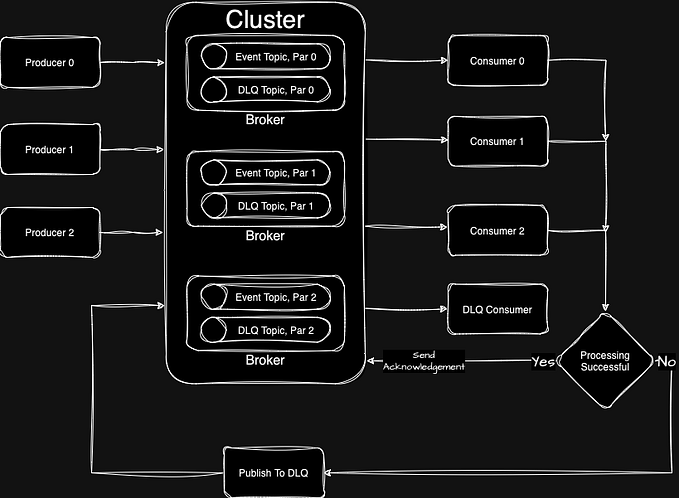

Key Kafka Concepts

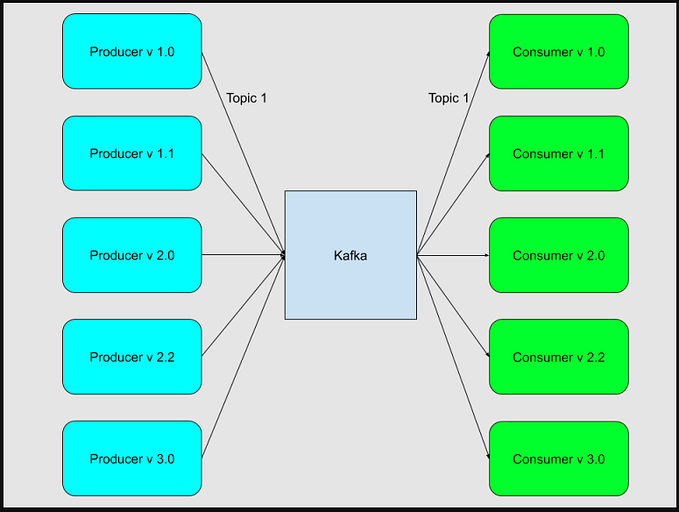

- Producer: Sends data (messages) to Kafka.

- Consumer: Reads data from Kafka.

- Broker: A Kafka server that stores data and serves requests from producers and consumers. A Kafka cluster consists of one or more brokers.

- Topic: Logical channel where producers send data, and consumers subscribe to read data.

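- Partition: Each topic is split into one or more partitions, which can be spread across brokers and read in parallel for scalability. Kafka guarantees message order within a partition, not across a whole topic.

- Offset: Each message in a partition has a sequential ID called its offset. Consumers track offsets to know which messages they have already read, and can resume (or rewind) from a specific point.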
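To make partitions and offsets more concrete, here is a minimal in-memory sketch. InMemoryTopic and its methods are hypothetical names used only for illustration, not part of any Kafka API, and the partition choice uses String.hashCode() as a stand-in for the murmur2 hash that Kafka's default partitioner applies to keyed messages.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical, simplified model of one Kafka topic. Real brokers store each
// partition as an append-only log on disk and can replicate it across brokers.
class InMemoryTopic {
    private final List<List<String>> partitions = new ArrayList<>();

    InMemoryTopic(int partitionCount) {
        for (int i = 0; i < partitionCount; i++) {
            partitions.add(new ArrayList<>());
        }
    }

    // Maps a key to a partition. Stand-in only: Kafka's default partitioner
    // hashes the serialized key bytes with murmur2 rather than using hashCode().
    int partitionFor(String key) {
        return Math.floorMod(key.hashCode(), partitions.size());
    }

    // Appends to the key's partition and returns the new message's offset,
    // i.e. its position in that partition's log (0, 1, 2, ...).
    long append(String key, String value) {
        List<String> log = partitions.get(partitionFor(key));
        log.add(value);
        return log.size() - 1;
    }

    // Returns all messages in a partition from the given offset onward,
    // as a consumer resuming from that offset would see them.
    List<String> readFrom(int partition, long offset) {
        List<String> log = partitions.get(partition);
        return new ArrayList<>(log.subList((int) offset, log.size()));
    }
}
```

Because the partition is derived from the key, every message keyed "order-42" lands in the same partition and keeps its relative order, while messages in different partitions have no ordering guarantee relative to each other.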

Setting Up Kafka with Spring Boot

Let’s dive into the setup. First, make sure you have:

- Kafka running on your local machine, either installed directly or started from a Kafka Docker image.

- Spring Boot project set up (you can use Spring Initializr for this).

Step 1: Add Kafka Dependencies

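In your pom.xml file, add the Spring for Apache Kafka dependency. If your project inherits from spring-boot-starter-parent, as Spring Initializr projects do, Spring Boot manages the version for you, which avoids pairing your Spring Boot release with an incompatible spring-kafka release:

```xml
<dependency>
    <groupId>org.springframework.kafka</groupId>
    <artifactId>spring-kafka</artifactId>
    <!-- No version needed: Spring Boot's dependency management selects a compatible one -->
</dependency>
```

Step 2: Configure Kafka Properties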

Configure the Kafka settings in application.properties:

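```properties
# Broker address the client connects to first
spring.kafka.bootstrap-servers=localhost:9092
# Default consumer group (a @KafkaListener can override it with its own groupId)
spring.kafka.consumer.group-id=myGroup
# Start from the oldest retained message when the group has no valid committed offset
spring.kafka.consumer.auto-offset-reset=earliest
# Plain strings for keys and values in both directions
spring.kafka.producer.key-serializer=org.apache.kafka.common.serialization.StringSerializer
spring.kafka.producer.value-serializer=org.apache.kafka.common.serialization.StringSerializer
spring.kafka.consumer.key-deserializer=org.apache.kafka.common.serialization.StringDeserializer
spring.kafka.consumer.value-deserializer=org.apache.kafka.common.serialization.StringDeserializer
```

Step 3: Write the Producer and Consumer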
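Before writing the services, it helps to see what the auto-offset-reset=earliest setting from Step 2 controls. When a consumer group has no committed offset for a partition (for example, the first time myGroup runs), or its committed offset is no longer available, this setting decides where reading starts; Kafka's own default is latest. The hypothetical StartingOffsetSketch class below is a simplified model, not Kafka's code, and it covers only earliest and latest, leaving out other values such as none, which makes the consumer fail instead.

```java
import java.util.OptionalLong;

// Hypothetical, simplified model of where a consumer starts reading one partition.
// logStart: offset of the oldest message still retained.
// logEnd:   offset the next produced message will receive.
final class StartingOffsetSketch {

    enum ResetPolicy { EARLIEST, LATEST }

    static long startingOffset(OptionalLong committed, long logStart, long logEnd, ResetPolicy policy) {
        // A committed offset (the next offset the group should read) wins while it is still in range.
        if (committed.isPresent()
                && committed.getAsLong() >= logStart
                && committed.getAsLong() <= logEnd) {
            return committed.getAsLong();
        }
        // No usable committed offset: the reset policy decides.
        return policy == ResetPolicy.EARLIEST ? logStart : logEnd;
    }
}
```

With earliest, a brand-new group reads every retained message, including ones produced before the application started; with latest, it only sees messages produced after it joins.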

Writing the Kafka Producer

To send messages to Kafka, we’ll create a KafkaProducerService in Spring Boot.

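```java
import org.springframework.kafka.core.KafkaTemplate;
import org.springframework.stereotype.Service;

@Service
public class KafkaProducerService {

    private static final String TOPIC = "example_topic";

    private final KafkaTemplate<String, String> kafkaTemplate;

    // Constructor injection; Spring Boot auto-configures the KafkaTemplate from application.properties
    public KafkaProducerService(KafkaTemplate<String, String> kafkaTemplate) {
        this.kafkaTemplate = kafkaTemplate;
    }

    public void sendMessage(String message) {
        // send() returns a future instead of waiting for the broker to acknowledge the write
        kafkaTemplate.send(TOPIC, message);
    }
}
```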

KafkaTemplate is Spring for Apache Kafka's wrapper around the Kafka producer: it serializes keys and values with the configured serializers and publishes the resulting records to a topic. In a real-world application you may want to send structured messages (such as JSON), but for simplicity we'll stick with strings.
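If you do move to structured messages, one dependency-free option is to build the JSON string yourself and keep using the String serializers configured above. The OrderEvent class below is a hypothetical example, and its hand-written escaping covers only quotes and backslashes. In a real project you would more likely use a JSON library such as Jackson, or Spring for Apache Kafka's JsonSerializer.

```java
// Hypothetical event type, used only to illustrate sending structured messages
// through the existing String-based KafkaProducerService.
final class OrderEvent {
    private final String orderId;
    private final String status;
    private final int quantity;

    OrderEvent(String orderId, String status, int quantity) {
        this.orderId = orderId;
        this.status = status;
        this.quantity = quantity;
    }

    // Builds a compact JSON object such as {"orderId":"o-1","status":"CREATED","quantity":2}.
    String toJson() {
        return "{\"orderId\":\"" + escape(orderId)
                + "\",\"status\":\"" + escape(status)
                + "\",\"quantity\":" + quantity + "}";
    }

    // Minimal escaping for backslashes and double quotes only; control characters
    // and other edge cases are not handled, which is why a JSON library is preferable.
    private static String escape(String s) {
        return s.replace("\\", "\\\\").replace("\"", "\\\"");
    }
}
```

Calling producerService.sendMessage(new OrderEvent("o-1", "CREATED", 2).toJson()) would then publish that JSON text to example_topic, and the consumer would receive it as an ordinary string.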

Writing the Kafka Consumer

For the consumer, we’ll create a KafkaConsumerService to receive messages from Kafka.

```java
import org.springframework.kafka.annotation.KafkaListener;
import org.springframework.stereotype.Service;

@Service
public class KafkaConsumerService {

    // Spring runs a listener container that reads example_topic as a member of consumer group "myGroup"
    @KafkaListener(topics = "example_topic", groupId = "myGroup")
    public void consume(String message) {
        System.out.println("Received message: " + message);
    }
}
```

The @KafkaListener annotation registers this method as a listener for example_topic: Spring invokes it for each record it receives, passing the deserialized value as the message argument.
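The groupId = "myGroup" attribute also matters for scaling. Within one consumer group, Kafka assigns each partition of example_topic to exactly one member, so running several instances of this application spreads the work, while a listener in a different group receives its own copy of every message. The sketch below shows that idea with a plain round-robin split. GroupAssignmentSketch is a hypothetical name, and Kafka's real assignors (selected with the partition.assignment.strategy consumer setting) also handle multiple topics and rebalancing when members join or leave.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical illustration of partition assignment inside one consumer group.
// Plain round-robin only; not Kafka's actual assignor implementation.
final class GroupAssignmentSketch {

    static Map<String, List<Integer>> assign(List<String> consumers, int partitionCount) {
        if (consumers.isEmpty()) {
            throw new IllegalArgumentException("a group needs at least one consumer");
        }
        Map<String, List<Integer>> assignment = new LinkedHashMap<>();
        for (String consumer : consumers) {
            assignment.put(consumer, new ArrayList<>());
        }
        // Every partition gets exactly one owner within the group.
        for (int partition = 0; partition < partitionCount; partition++) {
            String owner = consumers.get(partition % consumers.size());
            assignment.get(owner).add(partition);
        }
        return assignment;
    }
}
```

With three partitions and four consumers, the fourth consumer receives no partitions: a group never has more active consumers for a topic than that topic has partitions.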

Step 4: Creating a Controller to Test the Producer

Let’s add a simple REST controller to trigger our producer, which will send messages to Kafka when a request is made.

```java
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RequestParam;
import org.springframework.web.bind.annotation.RestController;

@RestController
public class MessageController {

    private final KafkaProducerService producerService;

    public MessageController(KafkaProducerService producerService) {
        this.producerService = producerService;
    }

    // GET keeps the demo easy to try from a browser; real write operations usually use POST
    @GetMapping("/send")
    public String sendMessage(@RequestParam("message") String message) {
        producerService.sendMessage(message);
        return "Message sent to Kafka!";
    }
}
```

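Start Kafka, run your Spring Boot application, and send a GET request to http://localhost:8080/send?message=HelloKafka (for example, by opening the URL in a browser). If everything is working, the console prints "Received message: HelloKafka". This relies on the broker creating example_topic on first use, which it does while the broker setting auto.create.topics.enable is left at its default of true.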
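If you prefer to call the endpoint from code rather than a browser, the sketch below uses the JDK's built-in java.net.http.HttpClient (Java 11 and later). SendEndpointClient is a hypothetical helper; it assumes the application is listening on localhost:8080 as above, and it URL-encodes the message so that text containing spaces or symbols such as & reaches the controller intact.

```java
import java.io.IOException;
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;

// Hypothetical helper for calling MessageController's /send endpoint.
final class SendEndpointClient {

    // Builds the request URI, encoding the message for use in a query string.
    static URI sendUri(String baseUrl, String message) {
        return URI.create(baseUrl + "/send?message=" + URLEncoder.encode(message, StandardCharsets.UTF_8));
    }

    // Sends the GET request and returns the response body from the controller.
    static String send(String message) throws IOException, InterruptedException {
        HttpClient client = HttpClient.newHttpClient();
        HttpRequest request = HttpRequest.newBuilder(sendUri("http://localhost:8080", message)).GET().build();
        HttpResponse<String> response = client.send(request, HttpResponse.BodyHandlers.ofString());
        return response.body();
    }

    public static void main(String[] args) throws Exception {
        System.out.println(send("Hello Kafka"));
    }
}
```

With the application running, main() should print the controller's "Message sent to Kafka!" reply, and the application console should then log the received message.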

Real-World Applications of Kafka

- E-commerce: Real-time order tracking and inventory updates.

- Banking: Fraud detection and transaction tracking.

- Social Media: Tracking user activities and recommendations.

- Telecom: Call data analysis and customer support.

Kafka, paired with Spring Boot, simplifies these tasks by letting applications process data in real time as it arrives. That makes the combination a valuable skill set for backend development, big data engineering, and microservices development.

Kafka Job Market Insights

Kafka expertise is increasingly sought after, especially in industries focusing on big data, real-time analytics, and microservices architecture. Here’s what you can expect in terms of roles:

- Backend Developer: Many backend positions now list Kafka as a desired skill, especially for those with Java and Spring Boot experience.

- Big Data Engineer: With the rise of data-driven decision-making, big data roles demand Kafka skills for managing and processing high-volume data.

- Cloud & DevOps Engineer: Kafka is often part of cloud-based data pipelines, and major cloud providers such as AWS, Azure, and GCP offer managed Kafka or Kafka-compatible services (for example, Amazon MSK and Azure Event Hubs).

Conclusion

Kafka, combined with Spring Boot, is a powerhouse for building real-time applications. Whether you’re handling streams of data in e-commerce, finance, or social media, Kafka is a skill that’s in demand. So, experiment with Kafka, build small projects, and add these skills to your portfolio. Mastering Kafka will not only make you a strong backend developer but also open up career paths in data engineering, cloud, and more.

If you’re new to streaming and real-time applications, this combination of Kafka with Spring Boot is an excellent starting point.